Genesis

This research project is a result of my master's practical project in Media and Interaction Design at HEAD-Genève, exploring intuitive and ambient ways of engaging with technology beyond conventional interfaces like the mouse and keyboard.

Roles

Interaction Design

Prototyping

User Testing

Timeframe

6 months—Dec 2024 to May 2025

Tutor

Dominic Robson

Assisting Teachers

Pierre Rossel

Daniel Sciboz

Douglas Edric Stanley

Photography

Sylvain Leurent

Introduction

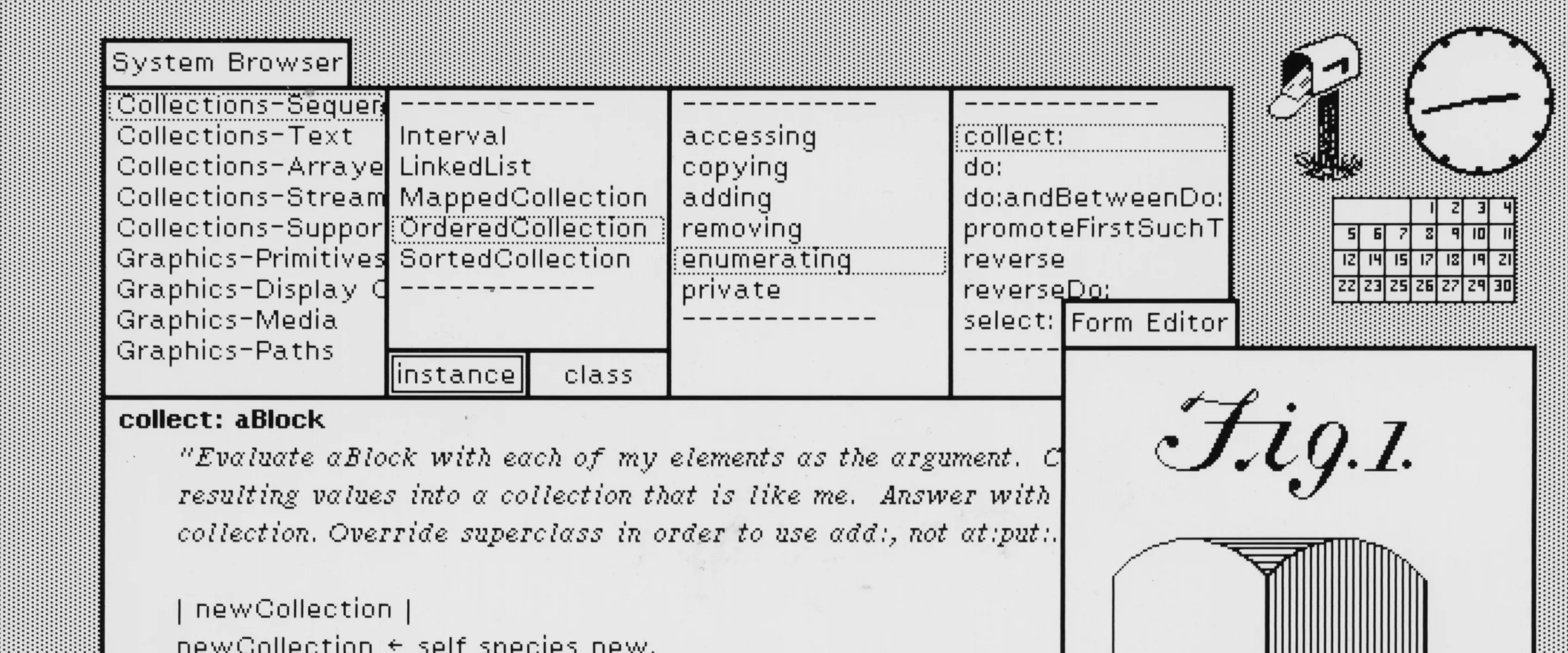

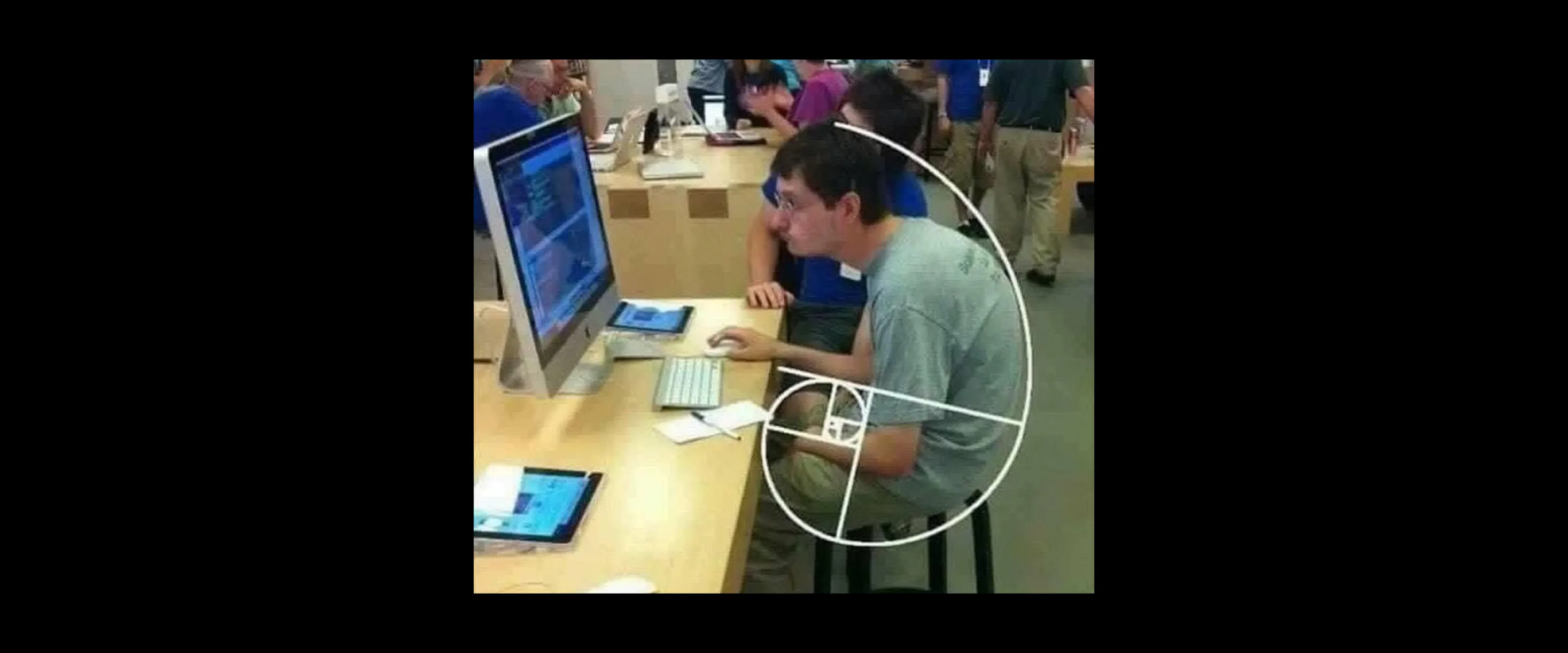

Much of how we use computers today still follows patterns we inherited from the 1980s: folders, icons, graphical desktops.

These ideas, developed in places like Xerox PARC, made early computers more accessible. But they also set a foundation that hasn't changed much since.

Decades later, we're still stuck there!

Still typing into rectangles.

Still saving files into boxes.

Still sitting, alone, hunched forward, clicking.

Genesis

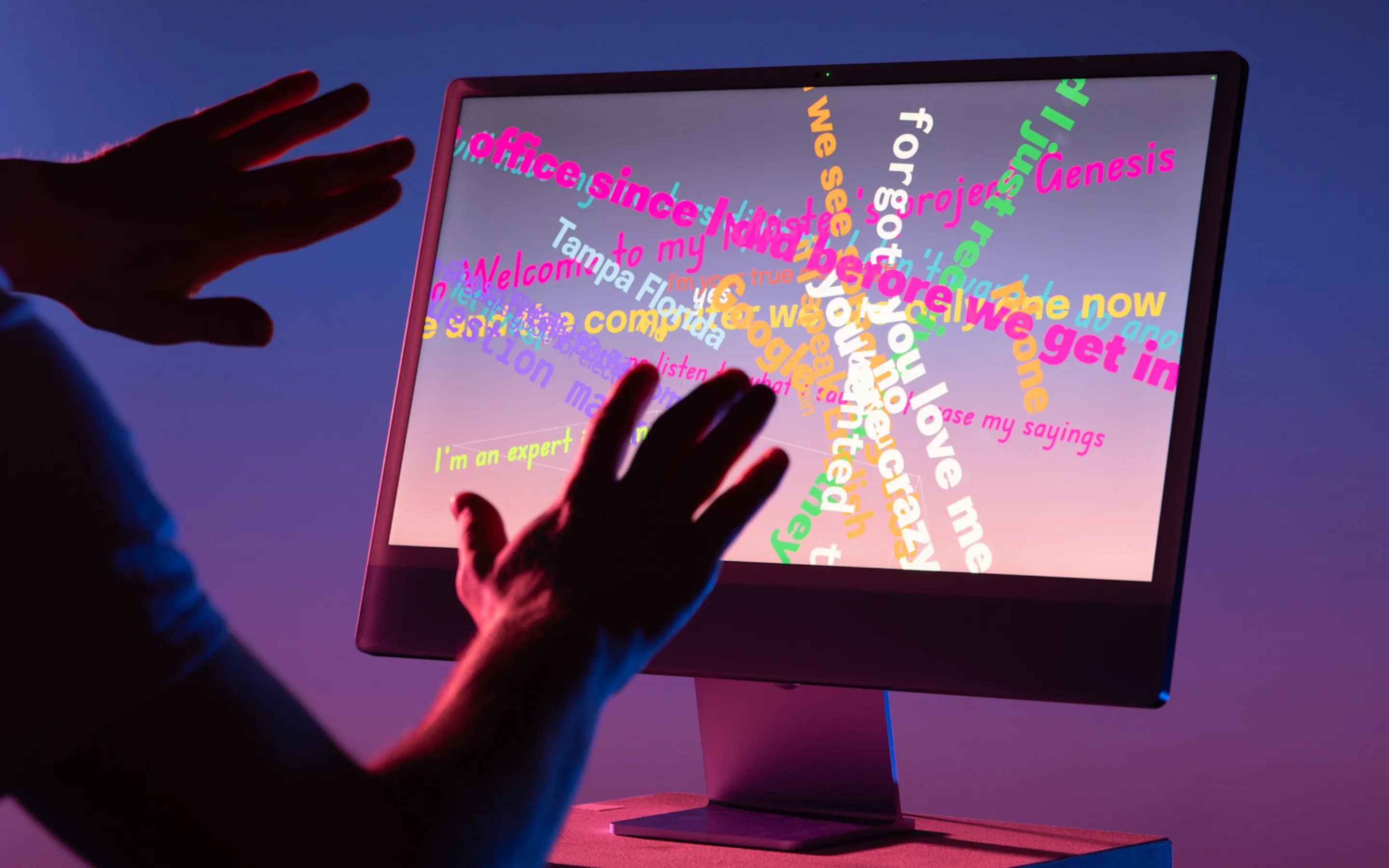

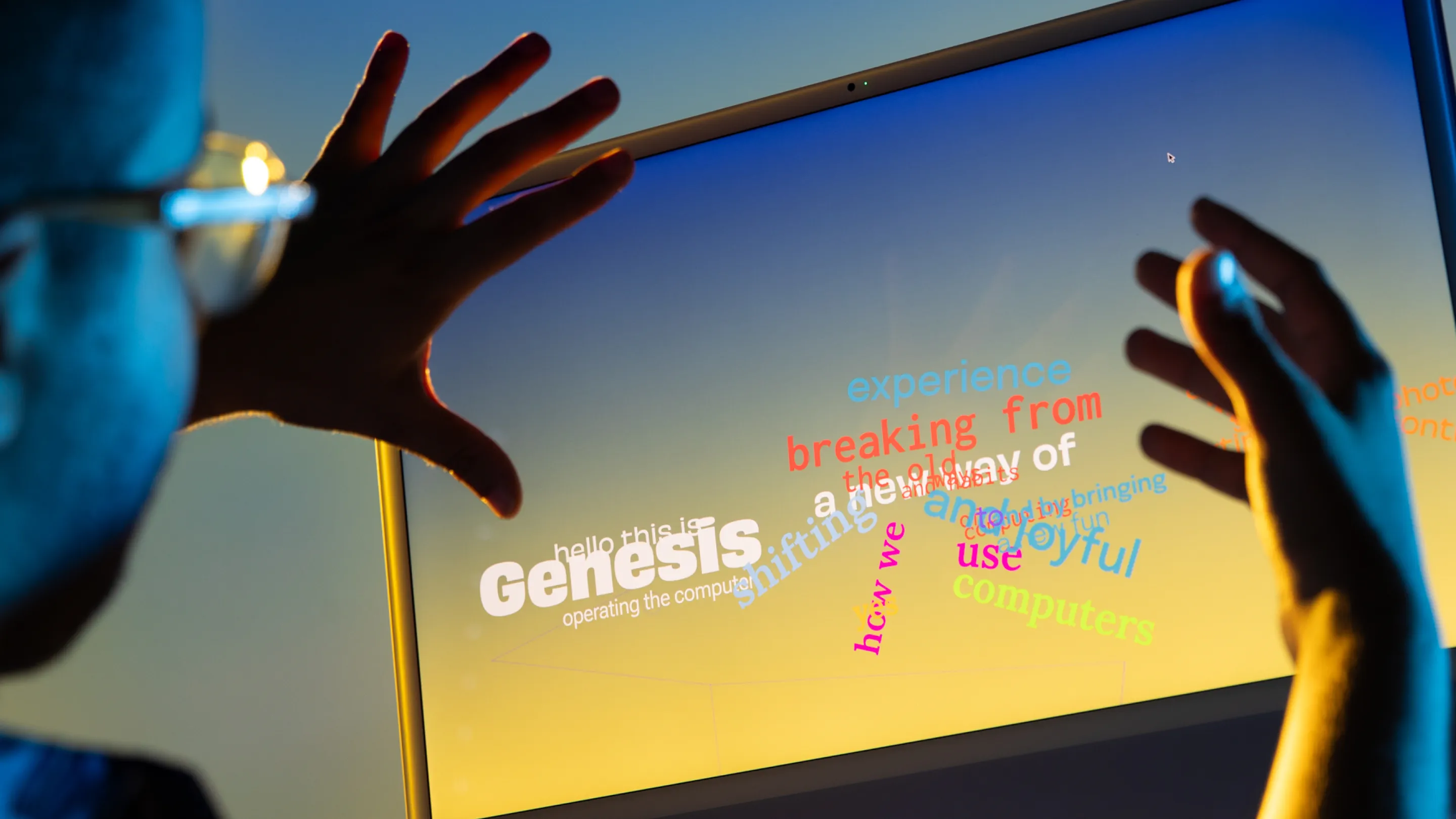

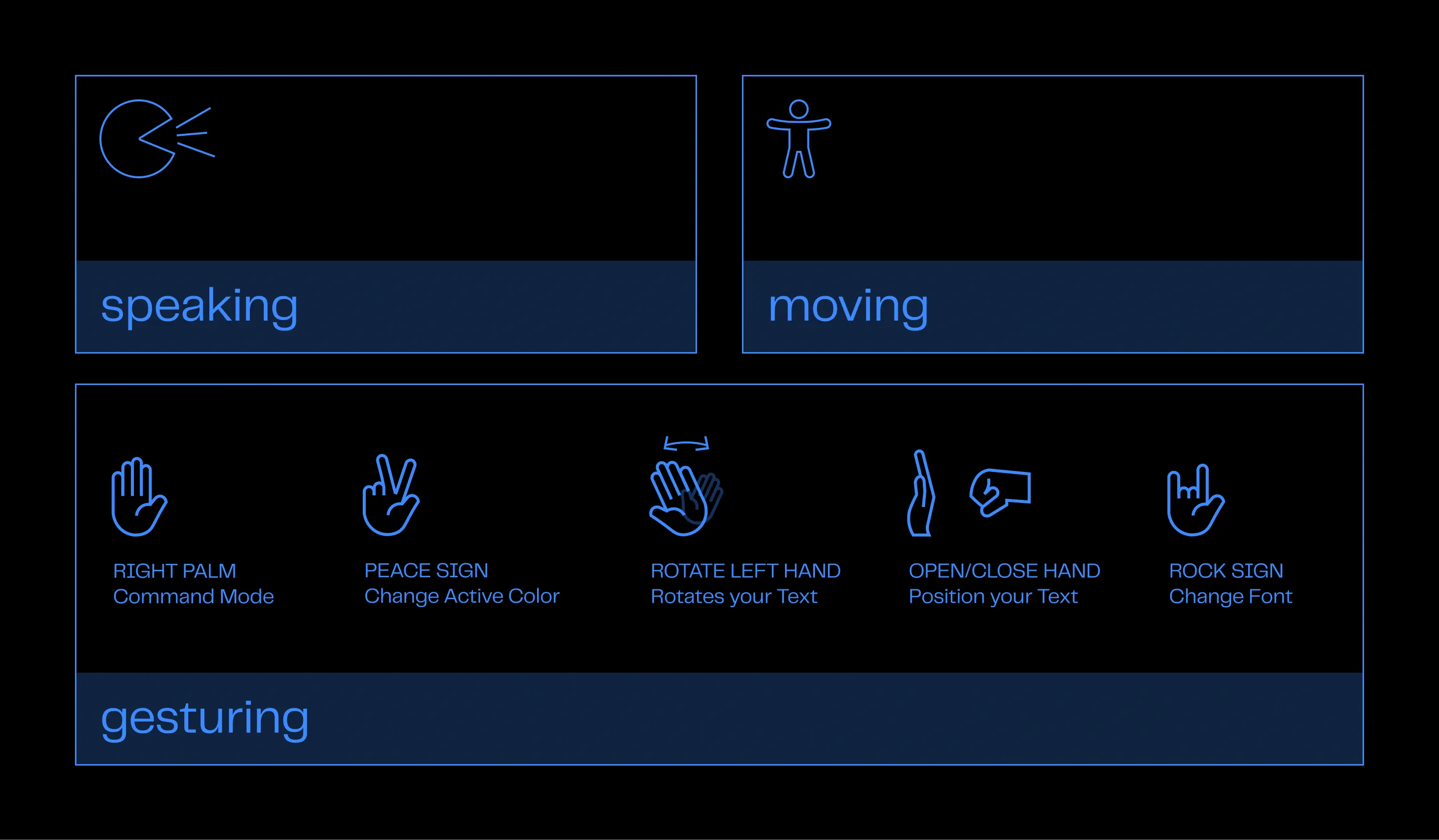

Genesis opens up new ways of interacting with computers, not through fixed commands or conventional interfaces like a mouse and keyboard, but through movement, voice, and playfulness.

As you speak and gesture, the computer responds with interpretation rather than precision. Words appear, drift, shift, and echo.

Your body becomes part of the writing process, shaping language in space. Writing turns into a kind of spatial conversation: thoughts unfold across the screen as they are spoken, placed, and rearranged, forming a living memory shaped by presence.

Rooted in Human-Computer Interaction, the project challenges the rigidity of conventional computing by exploring more intuitive, ambient, and embodied ways of engaging with machines, beyond the desktop environment...

...inviting us to rethink how we communicate with computers.

Context

Most of us still use computers in very similar ways:

One person.

One screen.

One keyboard.

One mouse.

But that's not how we think, and that's not how we create! Humans move, gesture, speak, and collaborate. Yet most digital environments ask us to sit still and type...

Why

The initial motivation came from a recurring frustration. Every time I sat down to think, to explore ideas and to work through complex thoughts, I found myself constrained by these neat, tidy boxes we call windows and applications.

And I began to ask myself: Why do we accept that interactions with a computer must happen through a keyboard and a mouse?

The Research

I'm not the first to ask these questions, of course.

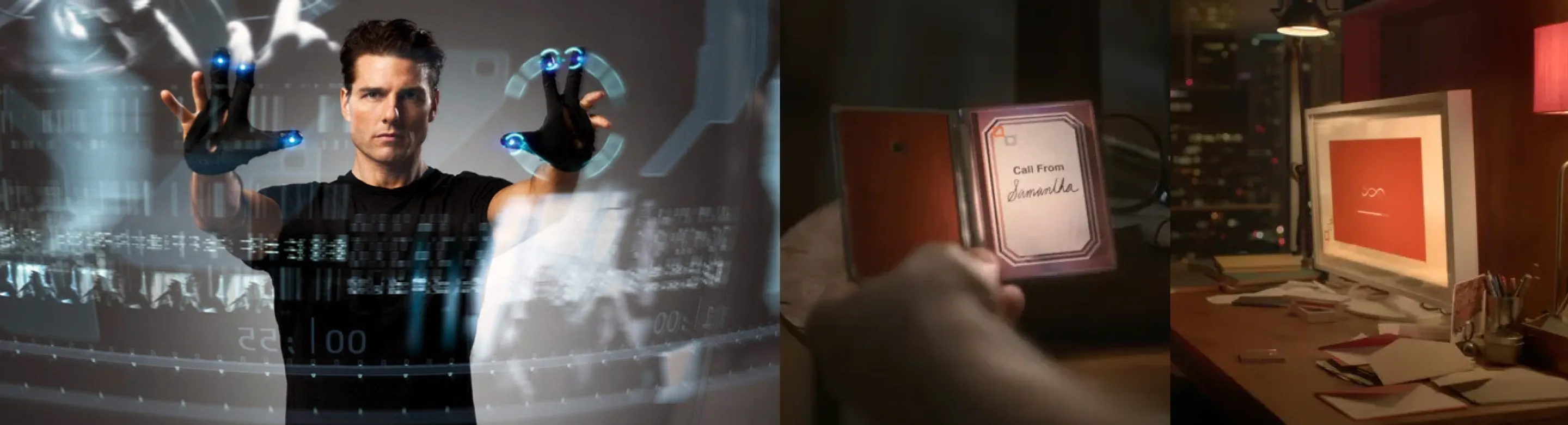

In the 1980s, the Architecture Machine Group at MIT tapped into science fiction dreams of communicating with machines by talking and pointing.

But there's also a long history of artists and designers experimenting with more expressive forms of interaction, like Re:MARK (2002) by Golan Levin and Zachary Lieberman, where speech becomes a visual performance, or Messa di Voce (2003), where voice and body create playful, reactive environments.

And more recently, tools like Procession by Spatial Pixel Studio have asked how we might program and design using our hands, voice and AI, not just through lines of code.

We've also seen many examples of futuristic interactions with computers in Hollywood, like the (really bad) Minority Report (2002) and Her (2013).

Research Direction

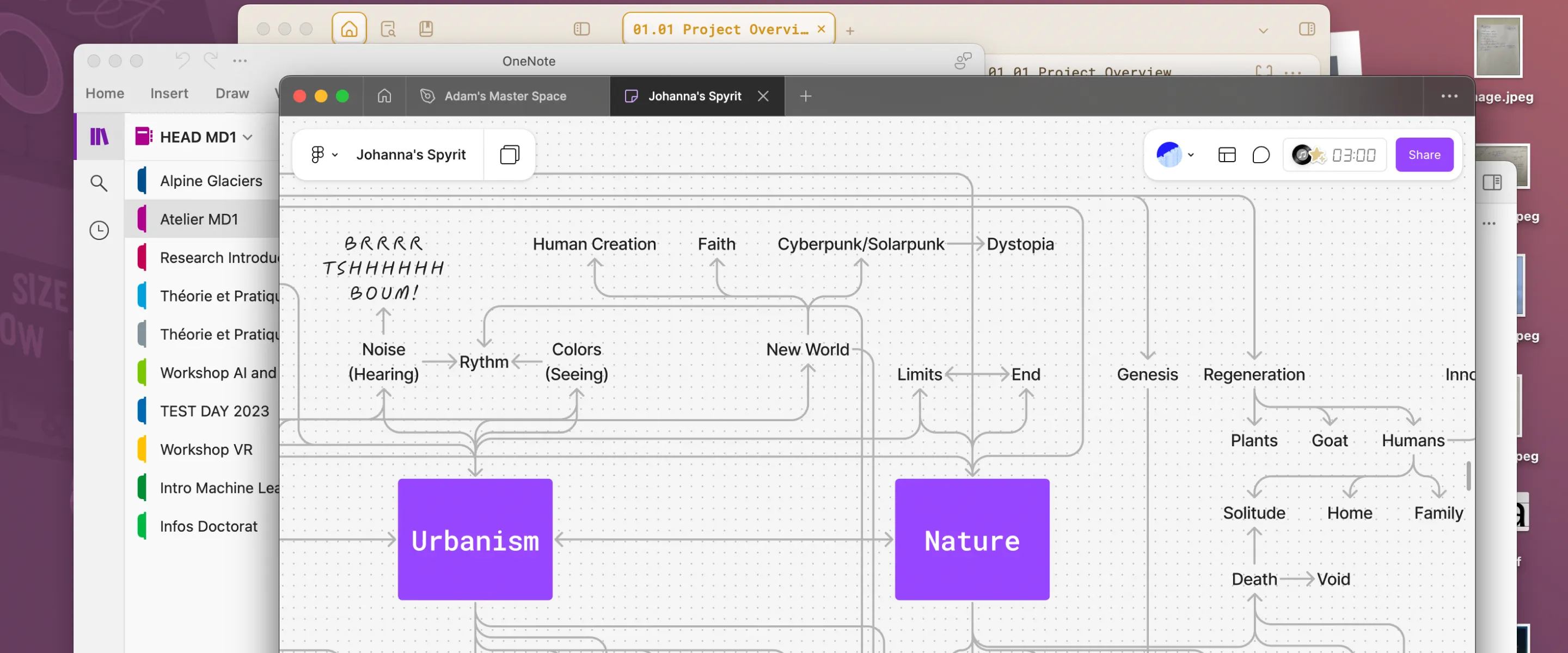

Playfulness

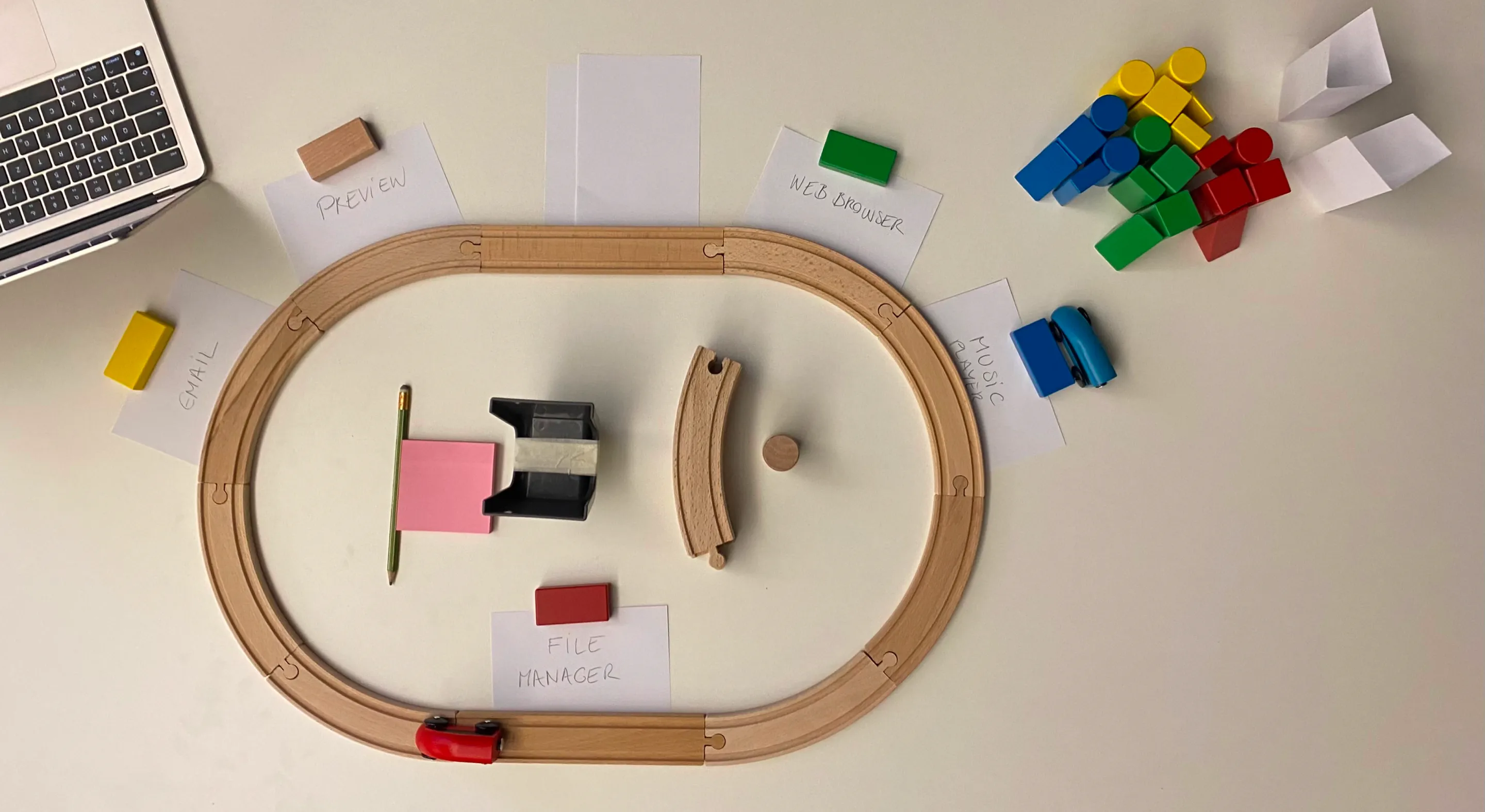

I built rough prototypes that weren't digital at all. One of them used a toy train set where each wagon held a piece of data or thought, and each station represented an app.

I wasn't trying to build a system yet.

I was first trying to unlearn habits and have fun!

The outcome of this exercise was that it broke people's habitual way of thinking about how they use computers, and even drew them into a sort of board-game scenario.

Speculation

I used sketches, paper prototypes, and narrative scenarios to explore ideas like mood-based interfaces, attention-based interaction, or even silence as input.

At some point, hardware felt too constraining and strict, especially after I tried dedicated devices like the famous Rabbit R1.

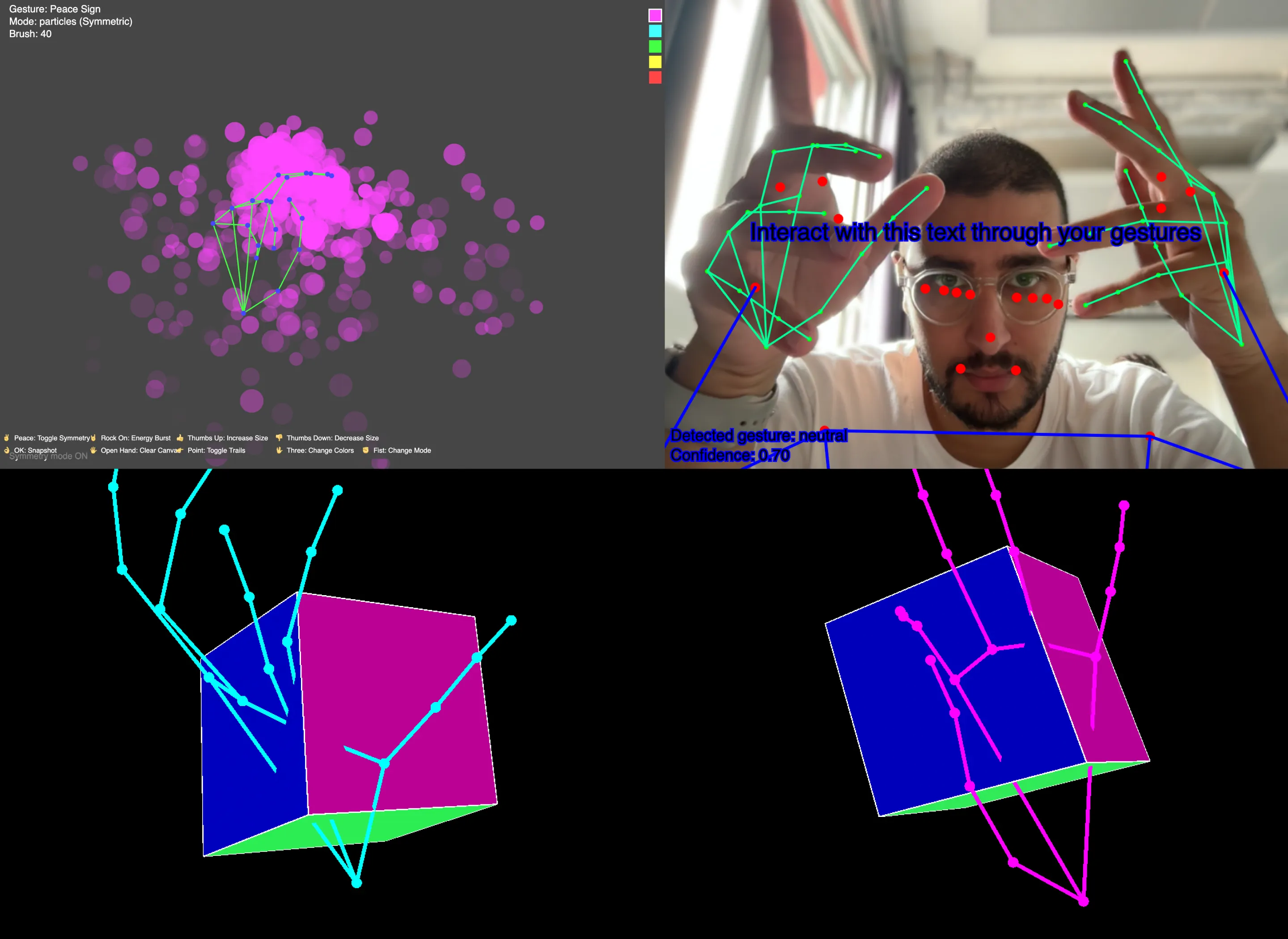

Reactivity

I worked directly with webcam and microphone input, using p5.js to create sketches that didn't wait for clicks but listened and responded to movement, voice, and proximity.

This phase brought playfulness back into my project! I was practically producing serotonin as I moved and spoke all over the place.

I had so many sketches where I could be in control and move stuff around with my hands, have quick 1:1 voice conversations with the computer, etc.
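The reactive sketches are described only at a high level. One common way a webcam sketch can respond to movement without any clicks is frame differencing: compare each frame's pixels with the previous frame's and treat the share of changed pixels as a motion amount. The TypeScript below is a minimal sketch of that idea, not the code behind Genesis. It assumes RGBA pixel buffers like the ones p5.js exposes through `pixels` after `loadPixels()` (or a canvas `ImageData.data`); the function name `motionAmount` and the `threshold` value are hypothetical.

```typescript
// Hypothetical helper: estimates how much movement happened between two
// webcam frames by frame differencing. Both buffers are assumed to be RGBA,
// 4 bytes per pixel, and the same size (e.g. p5.js `pixels` or ImageData.data).
function motionAmount(
  previous: Uint8ClampedArray,
  current: Uint8ClampedArray,
  threshold = 30, // assumed: brightness change (0-255) that counts as "moved"
): number {
  if (previous.length !== current.length || current.length % 4 !== 0) {
    throw new Error("frames must be RGBA buffers of the same size");
  }
  const pixelCount = current.length / 4;
  if (pixelCount === 0) return 0;
  let changed = 0;
  for (let i = 0; i < current.length; i += 4) {
    // Approximate brightness as the mean of R, G and B; alpha is ignored.
    const before = (previous[i] + previous[i + 1] + previous[i + 2]) / 3;
    const after = (current[i] + current[i + 1] + current[i + 2]) / 3;
    if (Math.abs(after - before) > threshold) changed++;
  }
  // Fraction of pixels that changed: 0 means still, 1 means everything moved.
  return changed / pixelCount;
}

// Usage with two tiny synthetic 2-pixel frames: only the second pixel changes.
const frameA = new Uint8ClampedArray([0, 0, 0, 255, 0, 0, 0, 255]);
const frameB = new Uint8ClampedArray([0, 0, 0, 255, 200, 200, 200, 255]);
console.log(motionAmount(frameA, frameB)); // 0.5
```

In a live sketch, the returned value could drive anything from word speed to opacity; counting changed pixels against a threshold keeps camera noise from registering as motion.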

Visuals

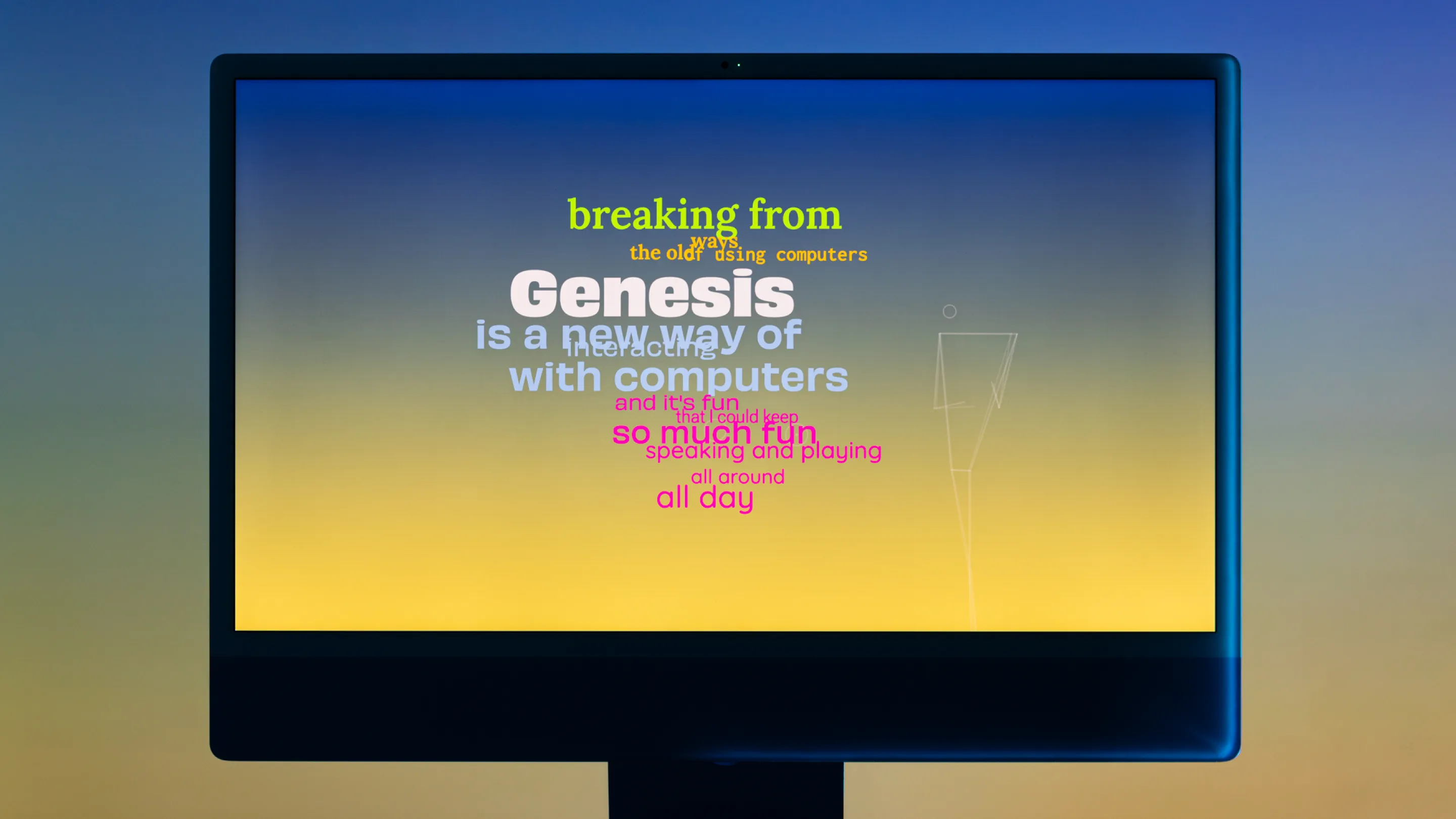

The background in the final output of Genesis is not static; it gently shifts every hour to mimic changing daylight. It is meant to feel ambient and open, more like a sky and less like a screen.

I wanted the environment to feel alive, so that it wouldn't look the same every time you used Genesis throughout the day.
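An hourly, daylight-like background can be sketched as a lookup from the current hour to a color, blending linearly between a few keyframes around the clock. This is a minimal sketch under stated assumptions: the `SKY_KEYFRAMES` palette and the `skyColorForHour` name are hypothetical, not the colors Genesis actually uses, and only the whole hour is read, so the color changes once per hour.

```typescript
type RGB = [number, number, number];

// Hypothetical keyframes: hour of day (0-23) -> sky color.
// Sorted by hour; the last segment wraps around midnight.
const SKY_KEYFRAMES: { hour: number; color: RGB }[] = [
  { hour: 0, color: [12, 16, 40] }, // night
  { hour: 6, color: [250, 180, 150] }, // dawn
  { hour: 12, color: [170, 210, 245] }, // midday
  { hour: 18, color: [240, 140, 90] }, // dusk
];

function lerp(a: number, b: number, t: number): number {
  return a + (b - a) * t;
}

// Returns the background color for a whole hour, interpolating linearly
// between the two keyframes that surround it.
function skyColorForHour(hour: number): RGB {
  const h = ((Math.floor(hour) % 24) + 24) % 24; // normalize to 0-23
  for (let i = 0; i < SKY_KEYFRAMES.length; i++) {
    const from = SKY_KEYFRAMES[i];
    const to = SKY_KEYFRAMES[(i + 1) % SKY_KEYFRAMES.length];
    // Treat the segment after the last keyframe as ending at 24 (midnight).
    const toHour = to.hour > from.hour ? to.hour : to.hour + 24;
    if (h >= from.hour && h < toHour) {
      const t = (h - from.hour) / (toHour - from.hour);
      return [0, 1, 2].map((c) => Math.round(lerp(from.color[c], to.color[c], t))) as RGB;
    }
  }
  return SKY_KEYFRAMES[0].color; // unreachable while the first keyframe is hour 0
}

// Only the hour is used, so the background changes once per hour.
console.log(skyColorForHour(new Date().getHours()));
console.log(skyColorForHour(3)); // halfway between night and dawn: 131, 98, 95
```

Reading only the hour matches the "every hour" behavior described above; feeding in fractional hours instead would give a continuous drift.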

Typography

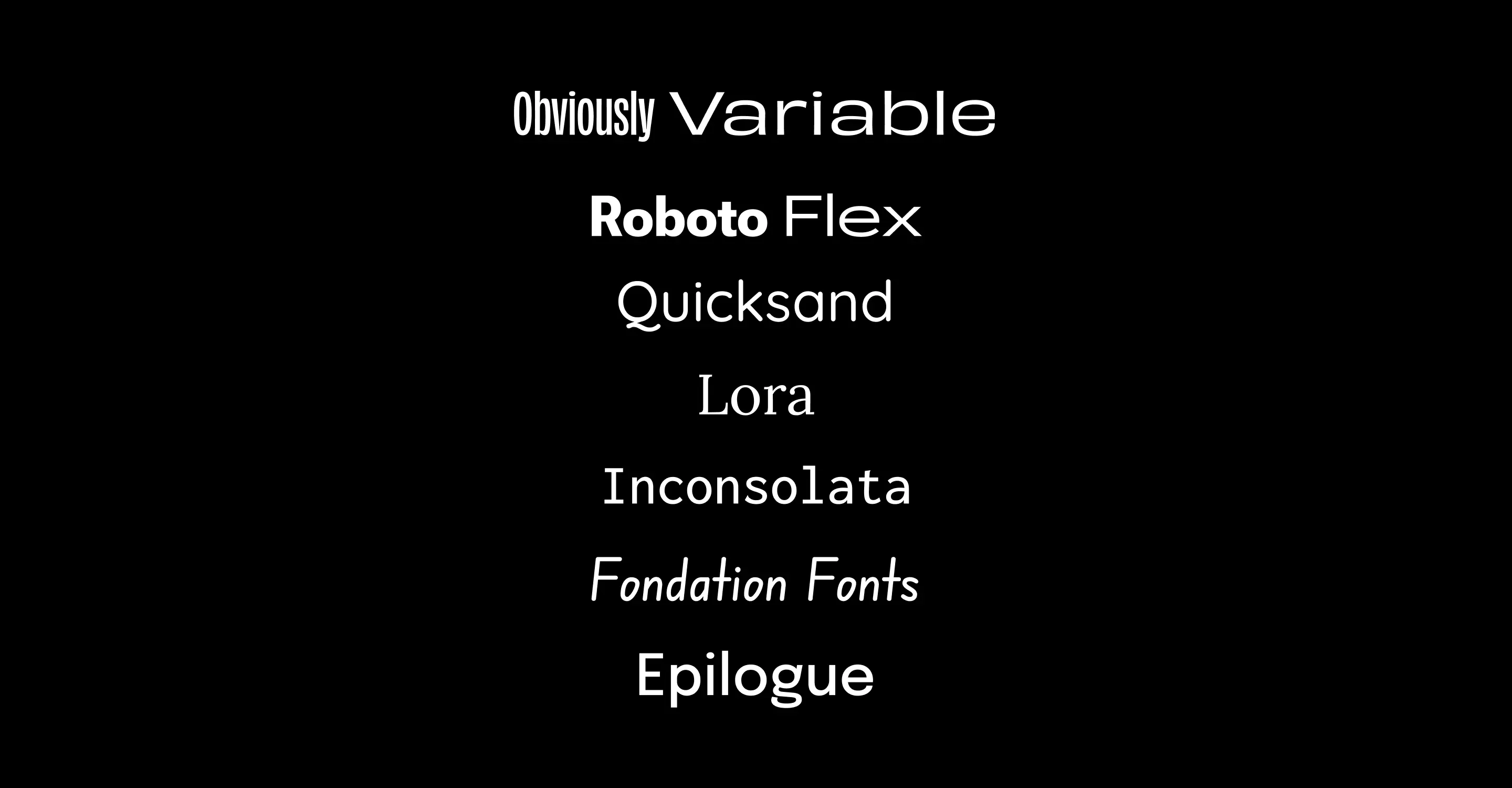

The typography in Genesis is responsive. It grows, shrinks, drifts, and changes position depending on the volume of your voice and where you stand in space, while the typeface itself stays the same.
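The behavior described above can be sketched as three small functions: one turning a microphone level into a text size, one turning a body position into a screen target, and one easing the words toward that target so they drift rather than jump. This assumes a level normalized to 0..1 (similar in range to what p5.sound's `getLevel()` reports) and a body position already normalized and mirrored by some tracker; the size range, the smoothing factor, and all function names are hypothetical, and the typeface is left untouched.

```typescript
// Hypothetical range for the text size, in pixels.
const MIN_SIZE = 24;
const MAX_SIZE = 160;

function clamp01(x: number): number {
  return Math.min(1, Math.max(0, x));
}

// Louder voice -> bigger words. `level` is assumed to be 0..1.
// The square root boosts quiet speech so it still visibly registers.
function sizeForLevel(level: number): number {
  return MIN_SIZE + (MAX_SIZE - MIN_SIZE) * Math.sqrt(clamp01(level));
}

interface Point {
  x: number;
  y: number;
}

// Maps a normalized body position (0..1 on each axis) to screen pixels.
function targetForBody(body: Point, width: number, height: number): Point {
  return { x: clamp01(body.x) * width, y: clamp01(body.y) * height };
}

// Moves `current` a fraction of the way toward `target` each frame,
// so words drift toward where you stand instead of snapping there.
function drift(current: Point, target: Point, smoothing = 0.1): Point {
  return {
    x: current.x + (target.x - current.x) * smoothing,
    y: current.y + (target.y - current.y) * smoothing,
  };
}

// Usage: one simulated frame with a moderate voice level and the person
// standing to the right of center.
const size = sizeForLevel(0.25); // 24 + 136 * 0.5 = 92
const target = targetForBody({ x: 0.75, y: 0.5 }, 800, 600); // x: 600, y: 300
const next = drift({ x: 400, y: 300 }, target); // x: 420, y: 300
console.log(size, target, next);
```

Calling `drift` once per frame gives an exponential ease: the words cover 10% of the remaining distance each frame, which reads as a gentle glide.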

You can, however, cycle through six different typefaces using this gesture 🤘, allowing the tone of the space to shift with you.

The goal wasn't to overwhelm with style, but to give just enough variation for expression, without distracting from the experience of interaction itself.
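Cycling typefaces with a gesture raises a practical detail: a hand tracker typically reports the pose on every video frame, so the switch should fire once when the gesture starts, not on every frame while it is held. A minimal sketch, assuming a hypothetical per-frame boolean `hornsDetected` from whatever tracker is in use, placeholder typeface names, and an assumed cooldown; none of these come from Genesis itself.

```typescript
// Placeholder names: the six typefaces used in Genesis are not listed here.
const TYPEFACES = ["Face A", "Face B", "Face C", "Face D", "Face E", "Face F"];

class TypefaceCycler {
  private index = 0;
  private wasActive = false;
  private lastSwitchMs = -Infinity;
  private readonly cooldownMs: number;

  // Assumed cooldown so a flickering detection can't switch twice in a row.
  constructor(cooldownMs = 800) {
    this.cooldownMs = cooldownMs;
  }

  get current(): string {
    return TYPEFACES[this.index];
  }

  // Call once per frame with whether the gesture is detected right now.
  // Switches only on the rising edge (gesture appears), not while it is held.
  update(hornsDetected: boolean, nowMs: number): string {
    const risingEdge = hornsDetected && !this.wasActive;
    if (risingEdge && nowMs - this.lastSwitchMs >= this.cooldownMs) {
      this.index = (this.index + 1) % TYPEFACES.length;
      this.lastSwitchMs = nowMs;
    }
    this.wasActive = hornsDetected;
    return this.current;
  }
}

// Usage: gesture held for three frames, released, then shown again later.
const cycler = new TypefaceCycler();
cycler.update(true, 0); // switches once, to "Face B"
cycler.update(true, 33); // still "Face B" while held
cycler.update(true, 66); // still "Face B"
cycler.update(false, 100); // released
console.log(cycler.update(true, 1000)); // "Face C"
```

Combining edge detection with a cooldown is a common way to make discrete gestures feel deliberate; the modulo wraps back to the first typeface after the sixth.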

User Testing

Vocabulary of Interaction

Typography

It's not about being efficient.

It's about discovering new ground, new gestures, new conversations, new ways of being with the machine.

Conclusion

Genesis isn't trying to replace the desktop or suggest a new standard. It's a proposal and a way of asking: What else is possible if we question the structures we've come to accept?

This kind of research feels especially important now. As AI reshapes how we interact with machines, we face a choice: repeat old metaphors, or open new ones. Should we build AI that only responds to typing, or systems that can understand gesture, voice, and shared presence?

This project made me question the structures I took for granted: folders, windows, fixed inputs. These are things I grew up with, and they are deeply rooted in how I and many other people interact with computers. It also made me ask: what would it mean to design for the body, not just the screen?

Moving forward, I'm interested in how interaction design can support more open, shared, and expressive forms of computing, especially within the environments we already use.

As someone with a graphic design background, I really enjoyed playing and having fun with this new tool.

I also see real potential for it to shift how we design, experiment, and create posters, editorial work, and more, freeing us from the rigid tools we rely on day to day.

One More Thing...

There's something else I haven't shown you.

When did you last create something with someone on a computer? At the same time?

You simply can't. Or you need a second computer.

Our computers isolate us. One person, one screen, one experience.

But what if they didn't?

What if two people could use Genesis at the same time? What if they're not taking turns and not waiting? What if they're creating together, thinking together, playing together in the same space?

Again, this isn't about productivity. This is about expression and the magic that happens when people explore ideas together.

I believe this could be the road ahead for the project: a multiplayer experience that puts humans and ideas first, on the same machine.